Understanding how a SET command executes, from the perspective of Redis internals

Notes:

- The Redis deployment used in this walkthrough runs in cluster mode.

- The network model is epoll.

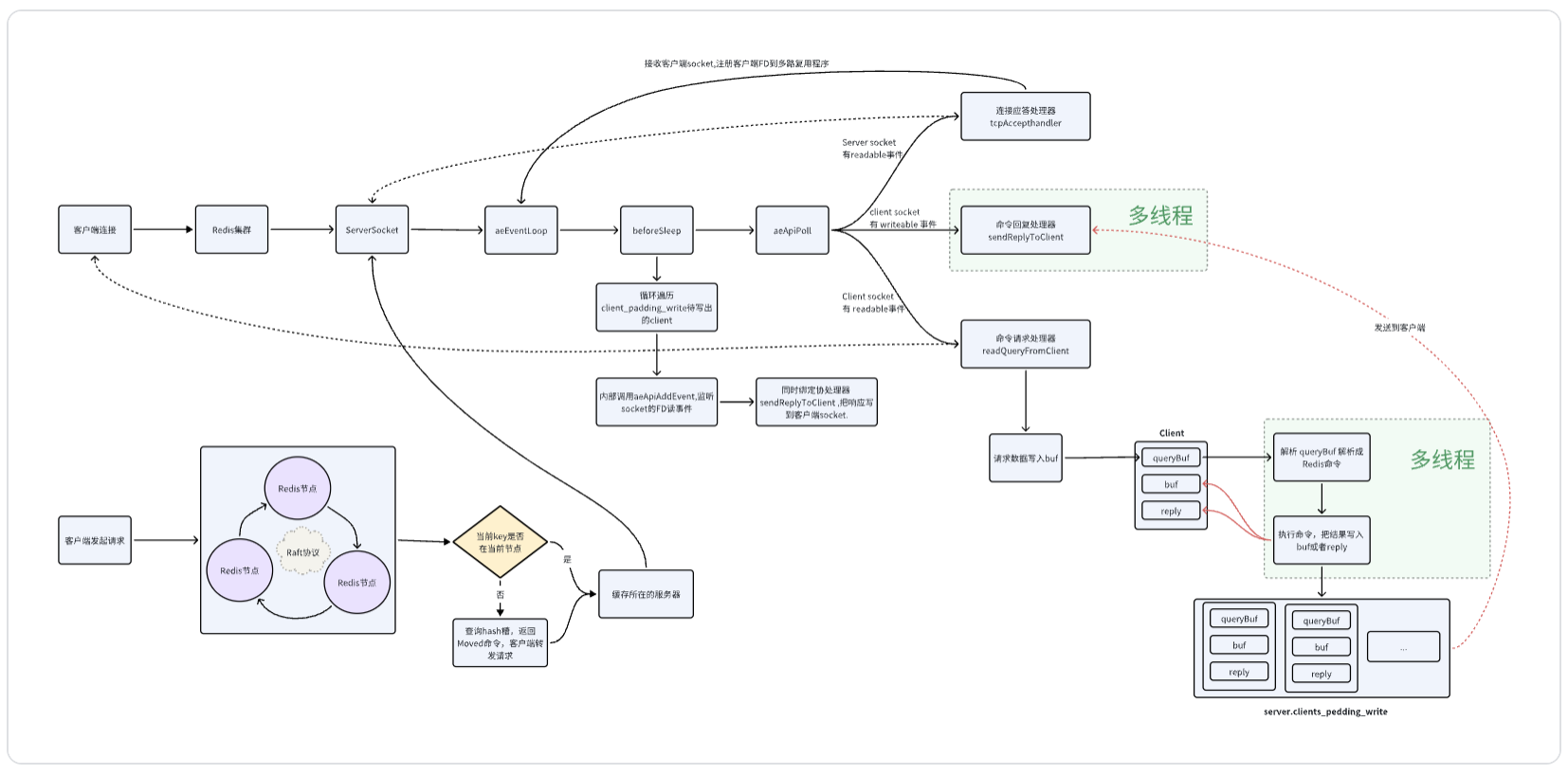

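This article follows a single SET command from the moment the Redis client sends it to the moment the Redis server receives and handles the request, and explains what happens at each step in terms of Redis internals.

Along the way it also touches on the following topics:

- How Redis executes commands

- How Redis Cluster works

- Why Redis is fast

- The epoll network model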

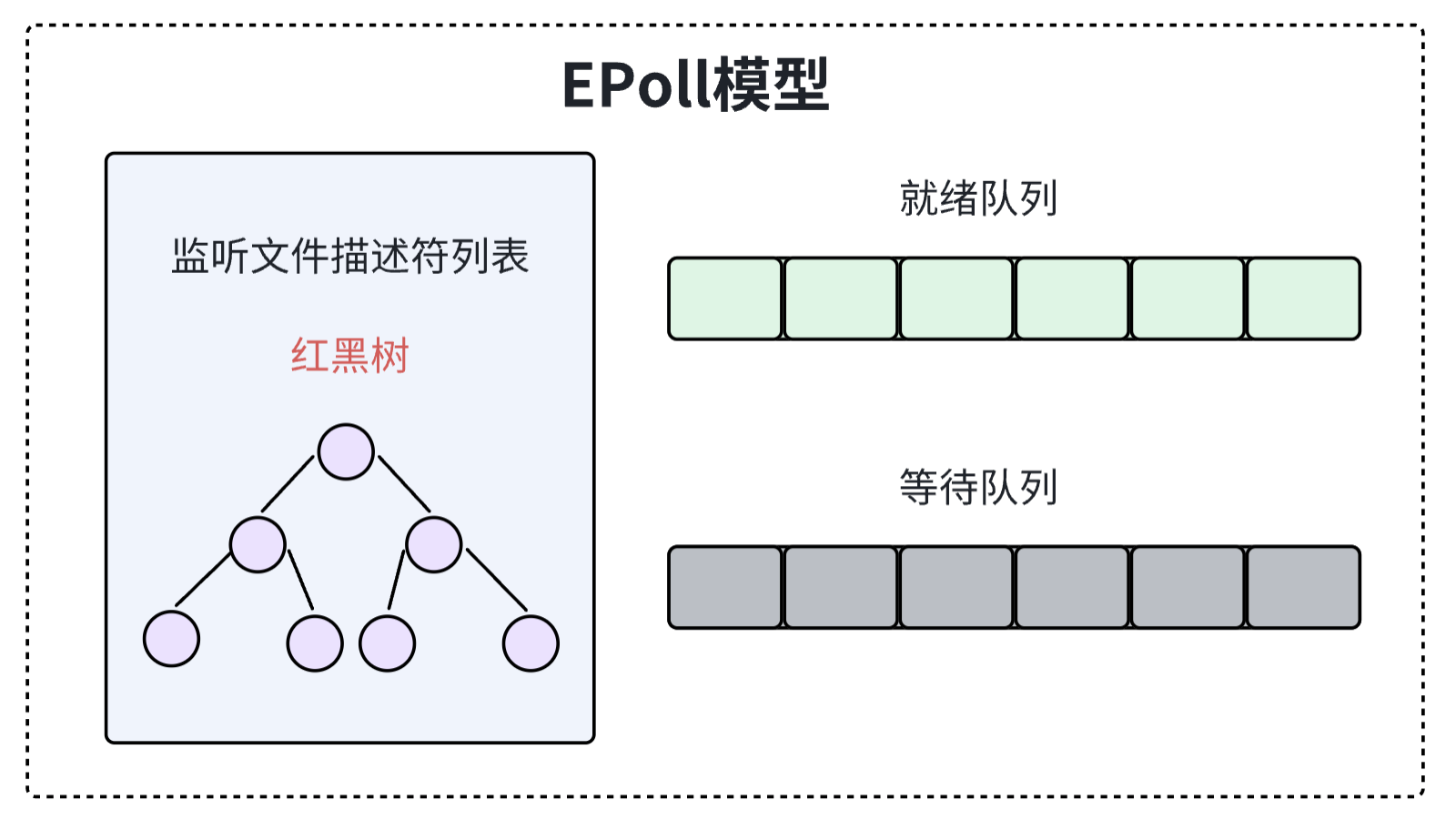

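To follow the request path, we first need to look at Redis's network model. Redis's event library ships four I/O multiplexing backends: select, epoll, kqueue, and evport. Of these, epoll is in my view the most widely used, so this article uses epoll as the example.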

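The epoll network model

Drawbacks of select and poll:

- Every call to select has to add the process to the wait queue of every monitored socket, and every wake-up has to remove it from each of those queues again. That is two traversals per call. In addition, the whole fd set is passed to the kernel on every call, which means copying it from user space to kernel space and adds overhead.

- select and poll return an int, the number of ready descriptors, so the caller does not know which socket is ready and has to scan the whole descriptor set again to find out.

To avoid the two traversals and the need to pass in the full descriptor set on every call, epoll reorganizes the overall structure as shown below: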
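To make the second drawback concrete, here is a minimal sketch of a select-based wait. The function name `first_readable_fd` is made up for this illustration. Note how the descriptor set is rebuilt on every call and how the caller has to scan all descriptors after select returns.

```c
#include <assert.h>
#include <sys/select.h>
#include <unistd.h>

/* Waits up to timeout_ms for any of fds[0..n-1] to become readable.
 * Returns the first ready descriptor, or -1 on timeout or error.
 * (Hypothetical helper, for illustration only.) */
int first_readable_fd(const int *fds, int n, int timeout_ms) {
    fd_set readfds;
    int maxfd = -1;

    /* The set is rebuilt and handed to the kernel on every call. */
    FD_ZERO(&readfds);
    for (int i = 0; i < n; i++) {
        FD_SET(fds[i], &readfds);
        if (fds[i] > maxfd) maxfd = fds[i];
    }

    struct timeval tv = { timeout_ms / 1000, (timeout_ms % 1000) * 1000 };
    int ready = select(maxfd + 1, &readfds, NULL, NULL, &tv);
    if (ready <= 0) return -1;  /* select only reports a count */

    /* select does not say which descriptor fired, so scan them all. */
    for (int i = 0; i < n; i++) {
        if (FD_ISSET(fds[i], &readfds)) return fds[i];
    }
    return -1;
}
```

With thousands of connections, both loops run on every wake-up even if only one socket has data.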

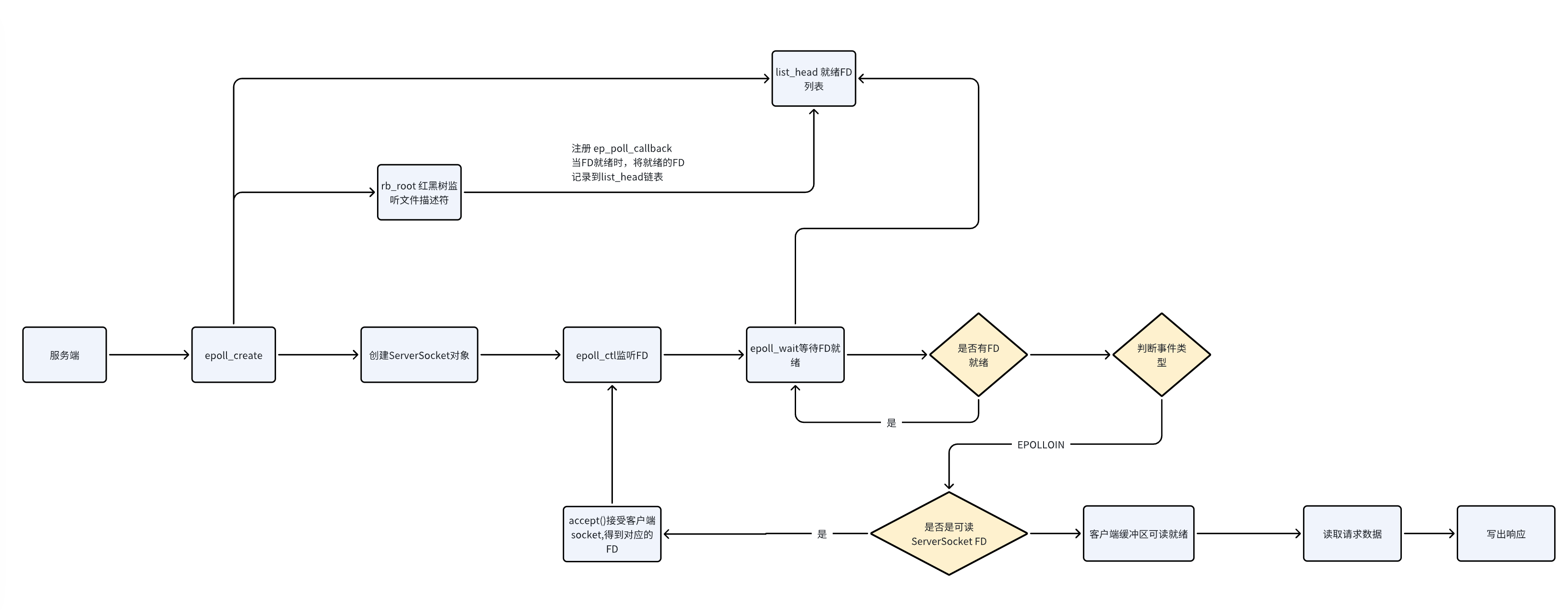

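Setting up epoll works as follows:

- epoll_create() allocates a new region in the kernel that stores the monitored file descriptors (in a red-black tree).

- epoll_ctl() adds, modifies, and removes file descriptors in that set.

- epoll_wait() blocks the calling thread and puts it on the epoll instance's wait queue.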
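The three calls above can be sketched as follows (Linux only). The helper names `epoll_watch_readable` and `epoll_unwatch` are assumptions made for this example. It uses `epoll_create1`, the newer variant of `epoll_create`.

```c
#include <assert.h>
#include <stddef.h>
#include <sys/epoll.h>
#include <unistd.h>

/* Creates an epoll instance and registers fd for read events.
 * Returns the epoll descriptor, or -1 on failure.
 * (Hypothetical helper, for illustration only.) */
int epoll_watch_readable(int fd) {
    int epfd = epoll_create1(0);  /* kernel-side interest list */
    if (epfd == -1) return -1;

    struct epoll_event ev = { 0 };
    ev.events = EPOLLIN;          /* interested in "readable" */
    ev.data.fd = fd;              /* handed back by epoll_wait */
    if (epoll_ctl(epfd, EPOLL_CTL_ADD, fd, &ev) == -1) {
        close(epfd);
        return -1;
    }
    return epfd;
}

/* Removes fd from the interest list; returns 0 on success, -1 on error. */
int epoll_unwatch(int epfd, int fd) {
    return epoll_ctl(epfd, EPOLL_CTL_DEL, fd, NULL);
}
```

The descriptor is registered once and stays in the kernel until it is removed, which is what saves epoll from passing the full set on every wait.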

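How epoll handles an incoming event:

With epoll, the kernel records ready descriptors on the epoll instance itself and then uses its scheduler to move the waiting thread from the suspended state to the runnable state, so the thread does not have to rescan every descriptor as it does with select/poll.

While a thread waits in epoll, the kernel monitors all registered file descriptors. As soon as a registered event occurs on one of them, the kernel reports it to the epoll instance. The steps are:

- The thread that calls epoll_wait waits on the epoll instance for an event. It is suspended and goes to sleep.

- When a registered event occurs on a file descriptor, the kernel records the event on the epoll instance.

- Once an event has occurred, the kernel wakes the waiting thread. This goes through the scheduler: the kernel moves the waiting thread to the runnable state.

- After the thread wakes up, epoll_wait returns and fills the array supplied by the caller with the event information, so the program can handle the events.

The flow in pseudocode: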
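As a small runnable counterpart to these steps, the sketch below (Linux only) waits on an epoll instance and copies the ready descriptors out of the array that epoll_wait fills. `demo_watch`, `demo_wait_ready`, and `DEMO_MAX_EVENTS` are names invented for this example.

```c
#include <assert.h>
#include <sys/epoll.h>
#include <unistd.h>

#define DEMO_MAX_EVENTS 16

/* Registers fd for read events on epfd; returns 0 on success. */
int demo_watch(int epfd, int fd) {
    struct epoll_event ev = { 0 };
    ev.events = EPOLLIN;
    ev.data.fd = fd;
    return epoll_ctl(epfd, EPOLL_CTL_ADD, fd, &ev);
}

/* Sleeps in epoll_wait for up to timeout_ms (-1 means forever).
 * On wake-up, copies the ready descriptors into ready_fds and
 * returns how many there are (0 on timeout, -1 on error). */
int demo_wait_ready(int epfd, int *ready_fds, int max, int timeout_ms) {
    struct epoll_event events[DEMO_MAX_EVENTS];
    if (max > DEMO_MAX_EVENTS) max = DEMO_MAX_EVENTS;

    /* The kernel fills events[] with only the descriptors that fired. */
    int n = epoll_wait(epfd, events, max, timeout_ms);
    for (int i = 0; i < n; i++) ready_fds[i] = events[i].data.fd;
    return n;
}
```

Unlike the select case, the caller iterates over exactly the descriptors that are ready, not over everything it registered.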

1 | // User-space code |

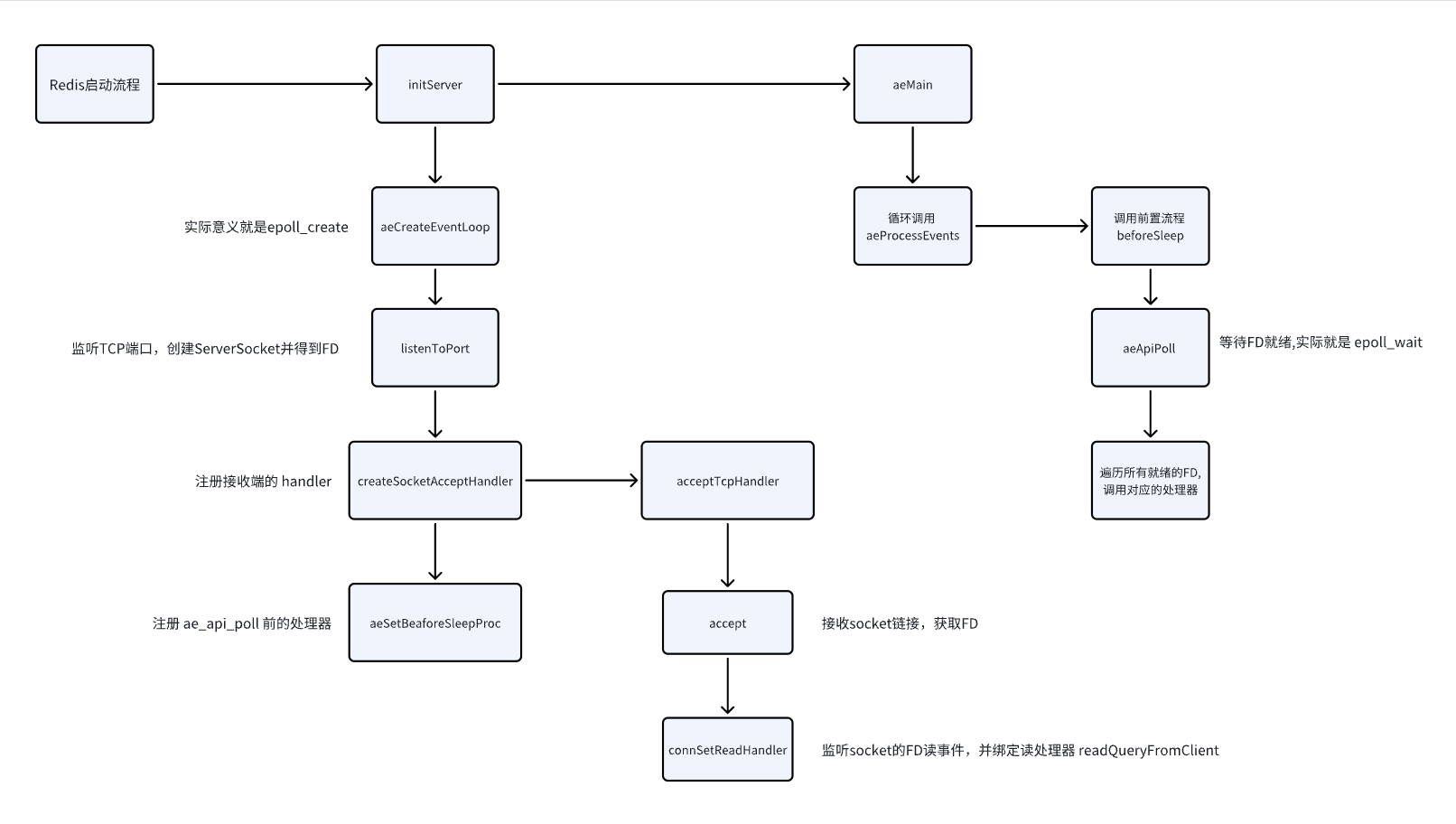

Redis server startup

Now that we have covered the epoll model, the next question is how Redis uses epoll for communication. Let's look at the core of Redis's startup code:

1 | int main(int argc, char **argv) { |

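Redis startup revolves around two functions, initServer and aeMain. initServer performs the following epoll-related steps:

- aeCreateEventLoop creates the event loop and, with it, the epoll instance that tracks the monitored file descriptors

- listenToPort listens on the configured port

- createSocketAcceptHandler registers the handler for accept events

- aeSetBeforeSleepProc installs the hook that runs before each wait

aeMain then loops, calling aeApiPoll (which wraps epoll_wait) to wait for file descriptors to become ready. The overall flow is as follows: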

Sending and executing the command

Redis Cluster mode

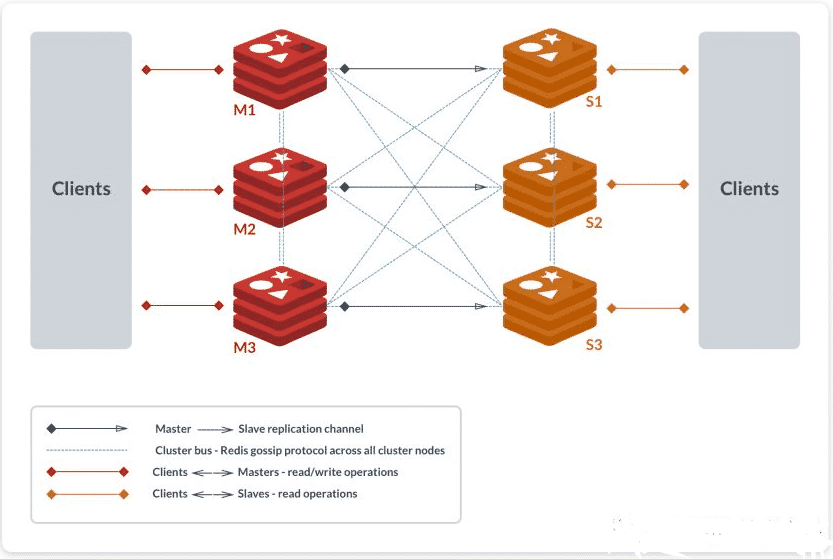

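Redis Cluster is a commonly used deployment architecture; the diagram shows its structure.

Master nodes in the cluster communicate with one another using the Gossip protocol, which keeps cluster state propagating between nodes.

A master keeps its replicas (slaves) in sync through asynchronous replication: it streams the write commands it executes to them.

With the Redis background covered, let's follow the command through the system.

Client connection

When Redis starts, it registers the connection accept handler (acceptTcpHandler). When a client connects, this handler accepts the connection and binds the new fd to its read handler (readQueryFromClient), so that when data becomes ready on the fd the corresponding handler is called.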

1 | void initServer(void) { |

Once registration is done, aeMain ends up calling epoll_wait(). The code flow is as follows:

1 | void aeMain(aeEventLoop *eventLoop) { |
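To show the shape of such a loop without reproducing the Redis source, here is a minimal sketch of an aeMain-style event loop on top of epoll (Linux only). Everything prefixed with `mini_` is invented for this example and is not part of Redis. The real ae library also handles write events, timers, and more.

```c
#include <assert.h>
#include <errno.h>
#include <string.h>
#include <sys/epoll.h>
#include <unistd.h>

#define MINI_MAX_FD 64

typedef struct mini_loop mini_loop;
typedef void mini_file_proc(mini_loop *loop, int fd, void *data);

struct mini_loop {
    int epfd;
    int stop;
    void (*before_sleep)(mini_loop *loop);     /* hook run before each wait */
    mini_file_proc *on_readable[MINI_MAX_FD];  /* read handler per fd */
    void *client_data[MINI_MAX_FD];
};

int mini_loop_init(mini_loop *loop) {
    memset(loop, 0, sizeof *loop);
    loop->epfd = epoll_create1(0);
    return loop->epfd == -1 ? -1 : 0;
}

/* Registers a read handler for fd; returns 0 on success. */
int mini_loop_add(mini_loop *loop, int fd, mini_file_proc *proc, void *data) {
    if (fd < 0 || fd >= MINI_MAX_FD) return -1;
    struct epoll_event ev = { 0 };
    ev.events = EPOLLIN;
    ev.data.fd = fd;
    if (epoll_ctl(loop->epfd, EPOLL_CTL_ADD, fd, &ev) == -1) return -1;
    loop->on_readable[fd] = proc;
    loop->client_data[fd] = data;
    return 0;
}

/* Runs until a handler sets loop->stop. */
void mini_loop_run(mini_loop *loop) {
    struct epoll_event events[MINI_MAX_FD];
    while (!loop->stop) {
        if (loop->before_sleep) loop->before_sleep(loop);
        int n = epoll_wait(loop->epfd, events, MINI_MAX_FD, -1);
        if (n == -1 && errno != EINTR) break;
        for (int i = 0; i < n; i++) {
            int fd = events[i].data.fd;
            if (loop->on_readable[fd])
                loop->on_readable[fd](loop, fd, loop->client_data[fd]);
        }
    }
}

/* Example handler: reads one byte into *data and asks the loop to stop. */
void mini_read_byte_and_stop(mini_loop *loop, int fd, void *data) {
    ssize_t n = read(fd, data, 1);
    (void)n;
    loop->stop = 1;
}
```

A single thread drives the whole loop: wait, dispatch the ready descriptors to their handlers, repeat. That is the pattern the rest of this article relies on.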

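Command execution

When you type set xxx aaa in the Redis client, the following happens:

- The client parses the input and encodes it in the RESP protocol as an array of bulk strings: *3\r\n$3\r\nSET\r\n$3\r\nxxx\r\n$3\r\naaa\r\n

- The client sends the request over its already established TCP connection to one of the nodes.

- That node checks whether the key belongs to a hash slot it serves. If not, it replies with a MOVED error, and the client resends the command to the node named in the reply. The server performs this check after it has parsed the command.

- When the request reaches the server and the socket becomes readable, epoll_wait returns the number of ready fds: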

1 | retval = epoll_wait(state->epfd,state->events,eventLoop->setsize, tvp ? (tvp->tv_sec*1000 + (tvp->tv_usec + 999)/1000) : -1); |
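For reference, the request bytes that make the socket readable are the RESP encoding of the command. A minimal sketch of that encoding, assuming NUL-terminated arguments (real clients are binary-safe and track lengths explicitly); `resp_encode` is a hypothetical helper, not a function from any Redis client library:

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Encodes argv[0..argc-1] as a RESP array of bulk strings into out.
 * Returns the number of bytes written (excluding the trailing NUL),
 * or -1 if out is too small. */
int resp_encode(int argc, const char **argv, char *out, size_t cap) {
    size_t pos = 0;

    /* Array header: *<number of elements>\r\n */
    int n = snprintf(out, cap, "*%d\r\n", argc);
    if (n < 0 || (size_t)n >= cap) return -1;
    pos += (size_t)n;

    for (int i = 0; i < argc; i++) {
        size_t len = strlen(argv[i]);

        /* Bulk string header: $<byte length>\r\n */
        n = snprintf(out + pos, cap - pos, "$%zu\r\n", len);
        if (n < 0 || (size_t)n >= cap - pos) return -1;
        pos += (size_t)n;

        /* Payload followed by \r\n; keep one byte for the final NUL. */
        if (len + 2 >= cap - pos) return -1;
        memcpy(out + pos, argv[i], len);
        memcpy(out + pos + len, "\r\n", 2);
        pos += len + 2;
    }
    out[pos] = '\0';
    return (int)pos;
}
```

Because every element carries its length up front, the server can read exactly that many bytes without scanning for delimiters inside the payload.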

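- The loop iterates over the ready fds and checks the event type. Here it is an EPOLLIN event, meaning the socket buffer is readable, so the registered function (readQueryFromClient) is called: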

1 | void readQueryFromClient(connection *conn) { |

- The handler reads the data from the fd, parses the command name set, and looks up the matching command implementation:

1 | void *dictFetchValue(dict *d, const void *key) { |
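The lookup maps a command name to its implementation and metadata. Redis keeps its command table in a hash table (dict). The sketch below swaps that for a small linear scan to stay short, and all `demo_` names are made up for illustration. The arity values follow the Redis convention that a negative number means "at least that many arguments".

```c
#include <assert.h>
#include <stddef.h>
#include <strings.h>  /* strcasecmp (POSIX) */

typedef struct demo_command {
    const char *name;
    int arity;  /* argument count including the name; negative = minimum */
} demo_command;

/* A tiny stand-in for the command table. */
static const demo_command demo_commands[] = {
    { "get", 2 },
    { "set", -3 },
    { "del", -2 },
};

/* Case-insensitive lookup by name; returns NULL for unknown commands. */
const demo_command *demo_lookup_command(const char *name) {
    size_t count = sizeof demo_commands / sizeof demo_commands[0];
    for (size_t i = 0; i < count; i++) {
        if (strcasecmp(demo_commands[i].name, name) == 0)
            return &demo_commands[i];
    }
    return NULL;
}

/* Returns 1 if argc is acceptable for cmd, 0 otherwise. */
int demo_arity_ok(const demo_command *cmd, int argc) {
    return cmd->arity >= 0 ? argc == cmd->arity : argc >= -cmd->arity;
}
```

For `set xxx aaa`, argc is 3, which satisfies the arity of -3. A bare `set xxx` would be rejected before the implementation runs.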

- The implementation for set, setCommand, is selected and executed:

1 | void setCommand(client *c) { |
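The hash-slot check mentioned in the steps above follows the rule from the Redis Cluster specification: slot = CRC16(key) mod 16384, where only the part between the first `{` and the next `}` is hashed if that part is non-empty. Here is a sketch of that rule with a bitwise CRC16 (XMODEM variant). Redis itself uses a lookup table, and the function names here are my own.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* CRC16, XMODEM variant: polynomial 0x1021, initial value 0. */
uint16_t crc16_xmodem(const char *buf, size_t len) {
    uint16_t crc = 0;
    for (size_t i = 0; i < len; i++) {
        crc ^= (uint16_t)((unsigned char)buf[i] << 8);
        for (int bit = 0; bit < 8; bit++) {
            crc = (crc & 0x8000) ? (uint16_t)((crc << 1) ^ 0x1021)
                                 : (uint16_t)(crc << 1);
        }
    }
    return crc;
}

/* Maps a key to one of the 16384 cluster hash slots. */
unsigned int key_hash_slot(const char *key, size_t len) {
    const char *lb = memchr(key, '{', len);
    if (lb != NULL) {
        size_t rest = len - (size_t)(lb - key) - 1;
        const char *rb = memchr(lb + 1, '}', rest);
        /* Hash only the tag if "{...}" is present and non-empty. */
        if (rb != NULL && rb > lb + 1)
            return crc16_xmodem(lb + 1, (size_t)(rb - lb - 1)) & 16383;
    }
    return crc16_xmodem(key, len) & 16383;
}
```

A node compares the result with the slots it owns. If the slot belongs to another node, the reply is a MOVED error carrying the slot number and the address of the owning node.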

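Generating the response

After the command has run, its implementation builds a reply object and appends it to the client's output buffer. This is usually done by the addReply family of functions. For SET, the implementation typically produces an "OK" reply and appends it to the output buffer.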

1 | void addReply(client *c, robj *obj) { |
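The idea behind addReply is that nothing is written to the socket yet: the reply is only appended to a per-client buffer and the client is marked as having pending output. Here is a much simplified sketch of that idea, using a single fixed buffer and invented `demo_` names. The real implementation also has a reply list for large responses.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define DEMO_REPLY_BUF 1024

typedef struct demo_client {
    char buf[DEMO_REPLY_BUF];  /* static output buffer */
    size_t bufpos;             /* bytes currently queued */
    int pending_write;         /* set when there is something to flush */
} demo_client;

/* Appends len bytes of reply to the client's output buffer.
 * Returns 0 on success, -1 if the buffer has no room left. */
int demo_add_reply(demo_client *c, const char *reply, size_t len) {
    if (len > sizeof c->buf - c->bufpos) return -1;
    memcpy(c->buf + c->bufpos, reply, len);
    c->bufpos += len;
    c->pending_write = 1;  /* the event loop flushes it later */
    return 0;
}
```

For a successful SET the queued bytes would be the RESP simple string `+OK\r\n`.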

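Sending the response

When the event loop finds data waiting in an output buffer, it calls writeToClient to send the reply to the client.

Through the steps above, Redis finds the implementation that matches the client's command, executes it, and sends the result back. The process spans several source files and functions: in recent versions the command table is defined in commands.c, setCommand lives in t_string.c, and the code that reads requests and writes replies is in networking.c.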

1 | void beforeSleep(struct aeEventLoop *eventLoop) { |
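Flushing the output buffer comes down to writing until the buffer is empty or the socket would block. A minimal sketch of that write loop follows. `demo_write_pending` is a made-up name, and the real writeToClient handles more cases, such as reply lists and write limits.

```c
#include <assert.h>
#include <errno.h>
#include <stddef.h>
#include <unistd.h>

/* Writes buf[*sent .. len) to fd, advancing *sent as bytes go out.
 * Returns 1 when everything has been sent, 0 if the socket would
 * block (try again once it is writable), -1 on any other error. */
int demo_write_pending(int fd, const char *buf, size_t len, size_t *sent) {
    while (*sent < len) {
        ssize_t n = write(fd, buf + *sent, len - *sent);
        if (n > 0) {
            *sent += (size_t)n;  /* partial writes are normal */
            continue;
        }
        if (n == -1 && errno == EINTR) continue;
        if (n == -1 && (errno == EAGAIN || errno == EWOULDBLOCK)) return 0;
        return -1;
    }
    return 1;
}
```

When the function returns 0, a server in this style would register the fd for write events and resume from `*sent` once the socket is writable again.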